Lucas Lehnert

Assistant Professor

Department of Computer Science

University of Saskatchewan

© 2026 Lucas Lehnert.

I am a computer science professor at the University of Saskatchewan, where I specialize in Artificial Intelligence (AI) and direct the Laboratory of Intelligent Learning Agents and Algorithms.

I believe that gaining a deeper understanding of intelligence is essential for responsibly shaping the future of AI technologies and their impact on society.

Therefore, my ultimate goal is to push the boundaries of our algorithmic understanding of intelligence.

To serve this goal, my research currently focusses on how intelligent systems can effectively learn and build a knowledge base to reliably solve complex decision-making tasks.

My primary focus revolves around Reinforcement Learning (RL) and sequential decision making. Currently, I am particularly interested in the following topics:

Lucas Lehnert is an Assistant Professor of Computer Science at the University of Saskatchewan with a specialization in artificial intelligence and reinforcement learning. His research focusses on how intelligent systems can effectively learn and build a knowledge base to reliably solve complex decision-making tasks. Prior to joining the University of Saskatchewan, he held postdoctoral positions at Meta's FAIR team and at the Mila Quebec AI Institute. Lucas earned his PhD in Computer Science from Brown University in 2021 following his MSc and BSc in Computer Science at McGill University. His interdisciplinary contributions have been recognized with an NIMH training grant in cognitive neuroscience and a best student workshop paper award.

I am seeking an MSc thesis student (Fall 2026 start term) to work on a project in reinforcement learning and generative AI. If you are interested in a research opportunity that combines mathematics and algorithm design with deep learning and software development in PyTorch, please reach out to this email. In your email, please explain your interest and attach your resume and unofficial transcript.

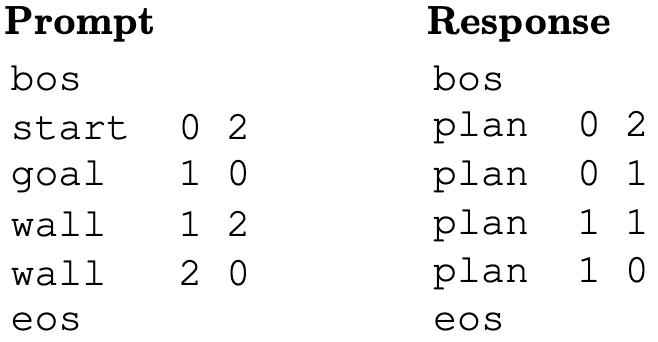

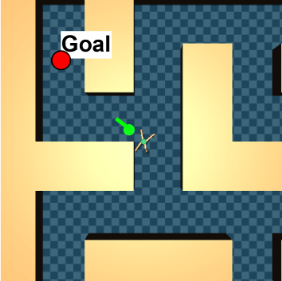

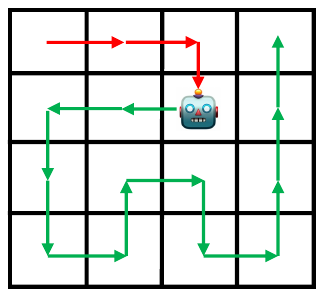

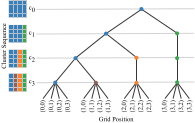

Lucas Lehnert, Sainbayar Sukhbaatar, DiJia Su, Qinqing Zheng, Paul Mcvay, Michael Rabbat, Yuandong Tian

Beyond A*: Better Planning with Transformers via Search Dynamics Bootstrapping

First Conference on Language Modelling (COLM), 2024

[workshop paper]

[code]

Rohan Chitnis, Yingchen Xu, Bobak Hashemi, Lucas Lehnert, Urun Dogan, Zheqing Zhu, Olivier Delalleau

IQL-TD-MPC: Implicit Q-Learning for Hierarchical Model Predictive Control

2024 IEEE International Conference on Robotics and Automation (ICRA)

[arXiv]

[workshop paper]

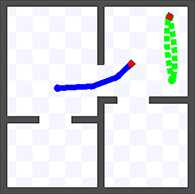

Arnav Kumar Jain, Lucas Lehnert, Irina Rish, Glen Berseth

Maximum State Entropy Exploration using Predecessor and Successor Representations

Advances in Neural Information Processing Systems 36 (NeurIPS 2023)

[arXiv]

[code]

[workshop paper]

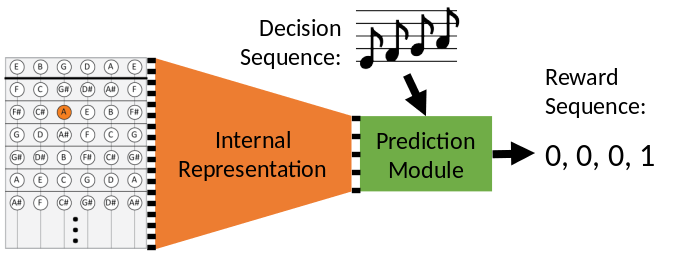

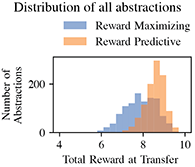

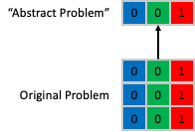

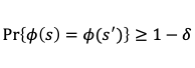

Lucas Lehnert, Michael J. Frank, and Michael L. Littman

Reward-predictive clustering

arXiv: 2211.03281 [cs.LG], 2022

Lucas Lehnert

Encoding Reusable Knowledge in State Representations

PhD Dissertation, Brown University, 2021

Lucas Lehnert, Michael L. Littman, and Michael J. Frank

Reward-predictive representations generalize across tasks in reinforcement learning

PLOS Computational Biology, 2020

[code]

[Docker Hub]

[bioRxiv]

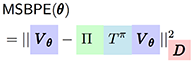

Lucas Lehnert and Michael L. Littman

Successor Features Combine Elements of Model-Free and Model-based Reinforcement Learning

Journal of Machine Learning Research (JMLR), 2020

[arXiv]

Lucas Lehnert and Michael L. Littman

Transfer with Model Features in Reinforcement Learning

Lifelong Learning: A Reinforcement Learning Approach workshop at FAIM, Stockholm, Sweden, 2018

[arXiv]

David Abel, Dilip S. Arumugam, Lucas Lehnert, and Michael L. Littman

State Abstractions for Lifelong Reinforcement Learning

Proceedings of the 35th International Conference on Machine Learning, PMLR 80:10-19, 2018

[pdf]

David Abel, Dilip Arumugam, Lucas Lehnert, and Michael L. Littman

Toward Good Abstractions for Lifelong Learning

NIPS workshop on Hierarchical Reinforcement Learning, 2017

[pdf]

Lucas Lehnert, Romain Laroche, and Harm van Seijen

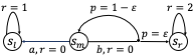

On Value Function Representation of Long Horizon Problems

In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, 2018

[pdf]

Lucas Lehnert, Stefanie Tellex, and Michael L. Littman

Advantages and Limitations of Using Successor Features for Transfer in Reinforcement Learning

Lifelong Learning: A Reinforcement Learning Approach workshop @ICML, Sydney, Australia, 2017

[arXiv]

Best Student Paper Award

Lucas Lehnert and Doina Precup

Using Policy Gradients to Account for Changes in Behavior Policies Under Off-policy Control

The 13th European Workshop on Reinforcement Learning (EWRL 2016) [pdf]

Lucas Lehnert and Doina Precup

Policy Gradient Methods for Off-policy Control

arXiv: 1512.04105 [cs.AI], 2015

Lucas Lehnert

Off-policy control under changing behaviour

Master of Science Thesis, McGill University, 2017 [pdf]

Lucas Lehnert and Doina Precup

Building a Curious Robot for Mapping

Autonomously Learning Robots workshop (ALR 2014), NIPS 2014 [pdf]

Arthur Mensch, Emmanuel Piuze, Lucas Lehnert, Adrianus J. Bakermans, Jon Sporring, Gustav J. Strijkers, and Kaleem Siddiqi

Connection Forms for Beating the Heart

Statistical Atlases and Computational Models of the Heart - Imaging and Modelling Challenges, 8896: 83-92. Springer International Publishing, 2014

Encoding Reusable Knowledge in State Representations

Invited talk at Mila Tea Talks, Mila - Quebec Artificial Intelligence Institute, Montréal, Canada, 2020 [recording]

Should intelligent agents learn how to behave optimally or learn how to predict future outcomes?

Invited talk at Structure for Efficient Reinforcement Learning (SERL) at RLDM 2019, Montréal, Canada, 2019

Transfer Learning Using Successor State Features

Invited talk at the RL late-breaking results workshop event at ICML 2017, Sydney, Australia, 2017 [slides]